Reading in detector data, and writing the code to do so, is one of the more tedious tasks in any data reduction program. Those of you who write your own data correction programs know this all too well. Many detector systems store their data slightly differently from the others, despite the availability of decent standards for storing images and their metadata (fortunately, some manufacturers are now using standard data storage formats). For example, the NIKA manual shows a rather lengthy list of formats for which support had to be written.

Since you would rather focus on the actual data correction and reduction functions than spend your time writing data read-in functions, some help would be appreciated. The fabIO package, developed by a group at the ESRF (with Jerome Kieffer, amongst others, who has been very patient answering my questions), can help with this. It is intended to read many of the image formats spat out by the detectors on the market. Having played with it for a while, I must say it is convenient and very helpful. The development of the core code appears complete, but there are regular updates with bugfixes and occasionally support for additional formats.

Best of all, it’s open source and well documented! Adding support for your own file types is encouraged and may be helpful to us all. The project can be found on GitHub.
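To give a feel for what this looks like in practice, here is a minimal sketch. As far as I know, `fabio.open` detects the file format by itself and returns an object carrying the pixel values in `.data` and the metadata in `.header`. The helper names `load_frame` and `describe_frame`, and the header keys in the stand-in frame, are my own inventions for illustration. The stand-in is only there so the example runs without a detector file at hand.

```python
from types import SimpleNamespace

import numpy as np


def load_frame(path):
    """Read one detector frame with fabIO (format is detected automatically)."""
    import fabio  # third-party; imported lazily so describe_frame works without it
    return fabio.open(path)


def describe_frame(frame):
    """Summarise any frame-like object that exposes .data and .header."""
    data = np.asarray(frame.data)
    return {
        "shape": data.shape,
        "dtype": str(data.dtype),
        "max": float(data.max()),
        "header_keys": sorted(frame.header),
    }


# Stand-in frame with made-up header keys (an assumption, not a real format).
fake = SimpleNamespace(
    data=np.arange(12, dtype=np.uint16).reshape(3, 4),
    header={"ExposureTime": "1.0", "Detector": "hypothetical"},
)
summary = describe_frame(fake)
print(summary)
```

With a real file you would call `describe_frame(load_frame("your_image.edf"))` instead, where the file name is of course a placeholder.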

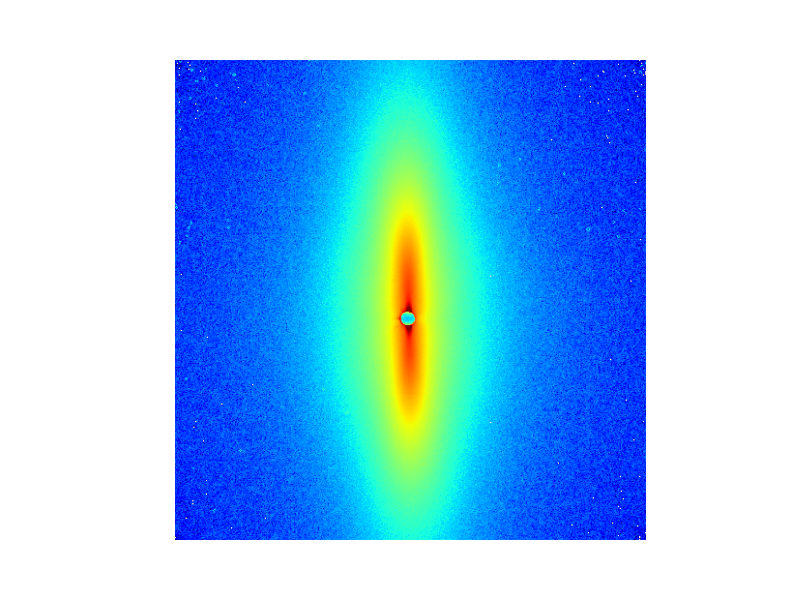

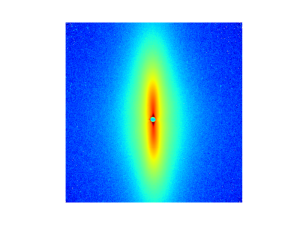

The second task that may require some time is the integration procedure for 2D data. Personally, I prefer to do most of the data corrections on the images followed by integration, but the reverse is also possible (for some corrections). Either way, data reduction is in order. To help with this (and to do this with the utmost speed), the same group have started developing an integration method, complete with limited data correction procedures. This project is called pyFAI, and can be found here.
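For those who have not written one before, the core of such an integration can be sketched in a few lines of NumPy. This is emphatically not pyFAI's algorithm, just a plain histogram-based radial average in pixel units, with a hypothetical function name (`radial_average`) and an assumed beam centre given as (row, column). It ignores pixel splitting, geometry and uncertainties.

```python
import numpy as np


def radial_average(image, center, n_bins, mask=None):
    """Average intensities in rings around `center` (row, col), in pixel units."""
    image = np.asarray(image, dtype=float)
    rows, cols = np.indices(image.shape)
    r = np.hypot(rows - center[0], cols - center[1])
    if mask is None:
        valid = np.ones(image.shape, dtype=bool)
    else:
        valid = ~np.asarray(mask, dtype=bool)  # mask is True for bad pixels
    edges = np.linspace(0.0, r[valid].max(), n_bins + 1)
    # Weighted histogram: summed intensity per ring.
    total, _ = np.histogram(r[valid], bins=edges, weights=image[valid])
    # Unweighted histogram: number of pixels contributing to each ring.
    count, _ = np.histogram(r[valid], bins=edges)
    with np.errstate(invalid="ignore", divide="ignore"):
        mean = total / count  # empty rings become NaN
    bin_centers = 0.5 * (edges[:-1] + edges[1:])
    return bin_centers, mean, count


# A flat synthetic image should integrate to a flat curve.
flat = np.full((21, 21), 5.0)
r_px, intensity, n_pix = radial_average(flat, center=(10, 10), n_bins=5)
print(r_px, intensity, n_pix)
```

Two histograms over the same bins are about the simplest way to do this, and they already show where the time goes: every pixel has to be assigned to a bin for every image, which is exactly the part worth optimising.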

The focus of that project has been on improving the efficiency of the algorithms, making it especially suitable for large amounts of data. The speed is achieved by using GPU acceleration and by combining correction steps so that as few image operations as possible are required. This project has seen a steady stream of updates over the last months (though it is quiet now, probably due to the holidays), and it is exciting to see what’s being discussed. I was quite happy to find out that there are efforts to (finally) describe the polarisation in proper Stokes parameters rather than the arbitrary definitions used up to now.
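One way to read "combining correction steps" is that all multiplicative corrections which do not change from frame to frame are folded into a single normalisation image once, so that each frame only needs one subtraction and one division. The sketch below shows that idea only. It is my own illustration, not pyFAI's code, and the function names and the choice of corrections (flat field, solid angle, polarisation) are assumptions.

```python
import numpy as np


def combined_normalisation(flat, solid_angle, polarisation):
    """Fold the per-pixel multiplicative corrections into one array (done once)."""
    return np.asarray(flat) * np.asarray(solid_angle) * np.asarray(polarisation)


def correct(raw, dark, norm):
    """Per-frame work: one subtraction and one division."""
    return (np.asarray(raw, dtype=float) - dark) / norm


shape = (4, 4)
norm = combined_normalisation(
    flat=np.full(shape, 2.0),
    solid_angle=np.full(shape, 0.5),
    polarisation=np.full(shape, 0.8),
)
corrected = correct(raw=np.full(shape, 10.0), dark=np.full(shape, 2.0), norm=norm)
print(corrected)
```

The saving is small for a single image, but for thousands of frames it cuts the number of passes over each image from four or five to two.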

I hope to be able to use pyFAI in the future. It looks like I might have access to a pinhole-collimated instrument again after March, so I’m looking forward to that!
