Nothing new here

So it seems science has beaten us to the punch once again. Remember last week’s optimistic story on how you can make better use of […]

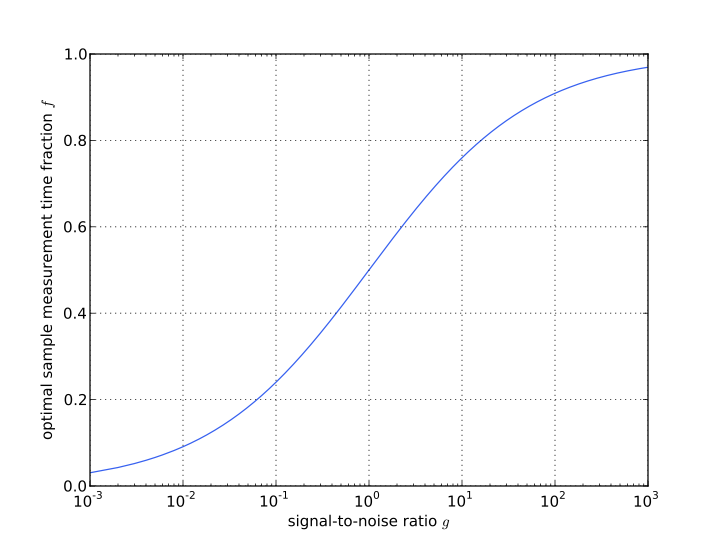

Often, especially when measuring on big facilities, you are given a limited amount of time. So when it comes to measuring the sample and the […]
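
The excerpt above is cut off, so what follows rests on an assumption about where it is heading. Suppose the question is how to divide a fixed amount of beamtime between the sample and a background measurement. A standard counting-statistics result (Poisson noise, background subtracted from the sample signal) is to split the time in the ratio t_s/t_b = sqrt(R_s/R_b), where R_s and R_b are the two count rates. The Python sketch below implements that textbook rule. The function names are hypothetical, and this is not necessarily what the original post recommends.

```python
import math


def optimal_time_split(rate_sample, rate_background, total_time):
    """Split total_time between sample and background runs (textbook rule,
    assumed here) so the background-subtracted rate has minimal uncertainty.

    With Poisson statistics the variance of the net rate is
        var = rate_sample / t_s + rate_background / t_b,
    and minimising it subject to t_s + t_b = total_time gives
        t_s / t_b = sqrt(rate_sample / rate_background).
    """
    ratio = math.sqrt(rate_sample / rate_background)
    t_background = total_time / (1.0 + ratio)
    t_sample = total_time - t_background
    return t_sample, t_background


def net_rate_uncertainty(rate_sample, rate_background, t_sample, t_background):
    """One-sigma uncertainty of (sample rate - background rate)."""
    return math.sqrt(rate_sample / t_sample + rate_background / t_background)


# Hypothetical numbers: 100 counts/s on the sample, 25 counts/s background, 1 h.
t_s, t_b = optimal_time_split(100.0, 25.0, 3600.0)
print(t_s, t_b, net_rate_uncertainty(100.0, 25.0, t_s, t_b))
```

For these hypothetical rates the rule gives 2400 s on the sample and 1200 s on the background. The resulting net-rate uncertainty is 0.25 counts/s, against about 0.26 counts/s for an even 1800 s / 1800 s split.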

It is with pleasure that I can announce the publication of another fibre-related work, right here (the electronic reprint will be made available after December on […]

A demonstration of the live Fourier Transform showing scattering patterns can be seen here:
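
The demonstration itself is not part of this excerpt. The underlying idea can be sketched by assuming the far-field (Fraunhofer/Born) approximation. In that approximation, the scattered intensity is proportional to the squared modulus of the Fourier transform of the object's density, which NumPy's 2-D FFT computes directly. The disc test object, the grid size and the function name below are hypothetical choices for illustration.

```python
import numpy as np


def scattering_pattern(density):
    """Return the centred far-field intensity |FFT(density)|^2 of a 2-D
    density map, as a simple stand-in for a small-angle scattering pattern."""
    amplitude = np.fft.fftshift(np.fft.fft2(density))  # zero frequency moved to the centre
    return np.abs(amplitude) ** 2


# Hypothetical test object: a single disc of radius 20 pixels on a 256 x 256 grid.
n = 256
y, x = np.mgrid[-n // 2:n // 2, -n // 2:n // 2]
disc = (x**2 + y**2 <= 20**2).astype(float)

intensity = scattering_pattern(disc)
# The central pixel holds the forward-scattering maximum, (sum of density)^2.
print(intensity[n // 2, n // 2], disc.sum() ** 2)
```

Displaying `np.log(intensity + 1)` as an image shows the concentric rings expected for a disc. Recomputing it every time the density map is edited gives a simple "live" transform.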

So, I could not do what I promised last time: the Monte-Carlo fitting works on perfect simulated scattering patterns but is as yet unable […]
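
A Monte-Carlo size-distribution fit can be sketched by representing the sample as a fixed number of dilute spheres. One sphere radius at a time is replaced at random, and the change is kept only if the goodness of fit (χ², with an optimal scale factor) improves. This is a simplified Python illustration of that general approach, not the author's code. The function names, the 50-sphere population, the radius limits, the iteration count and the 1 % uncertainties are all assumptions. The test data are perfect simulated intensities, the case the paragraph above says already works.

```python
import numpy as np


def sphere_amplitude(q, r):
    """Normalised form-factor amplitude of a homogeneous sphere, F(0) = 1."""
    qr = np.outer(q, r)  # shape (len(q), len(r))
    return 3.0 * (np.sin(qr) - qr * np.cos(qr)) / qr**3


def model_intensity(q, radii):
    """Unscaled intensity of a dilute set of spheres: sum over spheres of V^2 F^2."""
    volume = 4.0 / 3.0 * np.pi * radii**3
    return (sphere_amplitude(q, radii) ** 2 * volume**2).sum(axis=1)


def chi_squared(q, data, sigma, radii):
    """Chi-squared after fitting the optimal scale factor by weighted least squares."""
    model = model_intensity(q, radii)
    w = 1.0 / sigma**2
    scale = np.sum(w * model * data) / np.sum(w * model * model)
    return np.sum(w * (data - scale * model) ** 2)


def monte_carlo_fit(q, data, sigma, n_spheres=50, r_min=1.0, r_max=50.0,
                    n_iter=2000, seed=0):
    """Replace one sphere radius at a time; keep the change only if chi^2 drops."""
    rng = np.random.default_rng(seed)
    radii = rng.uniform(r_min, r_max, n_spheres)
    chi2 = chi_squared(q, data, sigma, radii)
    start_chi2 = chi2
    for _ in range(n_iter):
        trial = radii.copy()
        trial[rng.integers(n_spheres)] = rng.uniform(r_min, r_max)
        trial_chi2 = chi_squared(q, data, sigma, trial)
        if trial_chi2 < chi2:
            radii, chi2 = trial, trial_chi2
    return radii, start_chi2, chi2


# Perfect simulated data (units assumed: q in 1/nm, radii in nm).
q = np.linspace(0.01, 1.0, 200)
true_radii = np.random.default_rng(1).normal(10.0, 1.0, 200)
data = model_intensity(q, true_radii)
sigma = 0.01 * data  # assumed 1 % uncertainty
radii, chi2_start, chi2_end = monte_carlo_fit(q, data, sigma)
print(chi2_start, chi2_end)
```

Because only improvements are accepted, χ² can never increase during the run. A practical version would also need a background term and a stopping criterion based on χ² rather than a fixed number of iterations.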

I have written some small, simple bits of Matlab software that can generate scattering patterns in the range you request for polydisperse, dilute spheres or […]
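
The Matlab programs themselves are not included here. The following Python sketch shows the same kind of calculation under stated assumptions: homogeneous spheres, a dilute system (no structure factor), and a Gaussian number distribution of radii averaged numerically over a uniform radius grid. The function names, the ±4σ radius range and the normalisation to I(0) = 1 are hypothetical choices.

```python
import numpy as np


def sphere_form_factor(q, r):
    """|F(qR)|^2 for a homogeneous sphere, normalised so that F(0) = 1."""
    qr = np.outer(q, r)
    amplitude = 3.0 * (np.sin(qr) - qr * np.cos(qr)) / qr**3
    return amplitude**2


def polydisperse_spheres(q_min, q_max, n_q, r_mean, r_sigma, n_r=400):
    """I(q) of dilute spheres with a Gaussian number distribution of radii.

    Dilute means no inter-particle interference (structure factor = 1), so
    I(q) is proportional to the average of N(R) V(R)^2 P(q, R) over R.
    Requires r_sigma > 0; the result is normalised so that I(q -> 0) = 1.
    """
    q = np.linspace(q_min, q_max, n_q)
    # Radius grid spanning +/- 4 sigma, clipped to stay positive.
    r = np.linspace(max(r_mean - 4 * r_sigma, 1e-3 * r_mean),
                    r_mean + 4 * r_sigma, n_r)
    number = np.exp(-0.5 * ((r - r_mean) / r_sigma) ** 2)
    volume = 4.0 / 3.0 * np.pi * r**3
    weights = number * volume**2
    intensity = sphere_form_factor(q, r) @ weights / weights.sum()
    return q, intensity


# Example: 10 nm spheres with 10 % polydispersity, q from 0.01 to 2 1/nm (units assumed).
q, intensity = polydisperse_spheres(0.01, 2.0, 500, r_mean=10.0, r_sigma=1.0)
```

Increasing `r_sigma` progressively smears out the sharp form-factor minima of the monodisperse sphere. Passing a different `q_min`, `q_max` and `n_q` gives the curve over whatever range is requested.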

Copyright © 2024